Modern airplanes travel the world through all kinds of weather with the help of radar. The Oxford Dictionary defines radar as “a system for detecting the presence, direction, distance and speed of an object. Radar is used to indicate there is something that has not yet come to the attention of a person or a group.”

We have all heard the expression “off the radar,” meaning out of sight and out of mind.

Radar technology is designed to alert all stakeholders to potential risks so that changes can be made to eliminate, or at least minimize, the impact of those risks. What do we mean by all stakeholders? Clearly, the pilot and co-pilot are the primary users of the aircraft's radar equipment.

But what about the crew, the passengers, and the families of everyone on board? They are also key stakeholders in an effective radar system. Ships and other seagoing vessels, meanwhile, use sonar to see what lies beneath the surface.

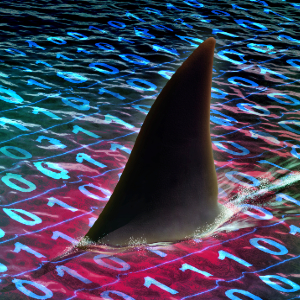

So, what do radar and sonar have to do with detecting hidden data risks? Radar provides the ability to see what lies ahead in the sky or on land. Forecasting and analytic engines are examples of radar-like systems that today’s organizations use to predict future outcomes (e.g., a five-year cash flow projection). Sonar-like systems, by comparison, can reveal what lies beneath the surface of the data and information landscape. Radar and sonar are both detection systems. Their task is to continually monitor their surroundings, which is a critical aspect of risk management.

Let’s focus on sonar; the radar side of risk management is a topic for another day. Today’s corporations are literally oceans of data, and the volume is growing at an accelerating rate. But what risks are lurking in the data? What data issues are going undetected? Which data issue is about to surface as a costly business decision, an audit finding, or, even worse, a material weakness that affects the company’s profitability and financial position? What lies beneath?

A few days ago, we were on a call with a client, a large wholesale mortgage lender. The purpose of the call was to explain a new data quality control that had flagged a variance in the data. The root cause was that an external data provider had a system issue it could not fix, which produced duplicate records. The duplicates caused primary key violations in the client’s data warehouse that required manual intervention. The client had tried unsuccessfully for months to get the provider to fix its data. To at least resolve the symptom, we “coded around” the duplicate records so the warehouse would load. The team had confirmed that the duplicate records had no dollar impact. So, day after day, the feeds from the external provider are processed and the duplicate records are bypassed.
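For readers curious what “coding around” duplicates can look like, here is a minimal Python sketch, not the client’s actual load code. It assumes each feed record has a single-column primary key (the hypothetical `loan_id`) and keeps the first record seen for each key. `FeedRecord` and `split_duplicates` are illustrative names. Note that the skipped records are set aside rather than silently dropped, so something downstream can still inspect them.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class FeedRecord:
    loan_id: str    # hypothetical primary key in the warehouse table
    balance: float  # hypothetical dollar field carried by the feed


def split_duplicates(records: Iterable[FeedRecord]) -> Tuple[List[FeedRecord], List[FeedRecord]]:
    """Keep the first record for each loan_id; return (to_load, skipped)."""
    seen = set()
    to_load: List[FeedRecord] = []
    skipped: List[FeedRecord] = []
    for rec in records:
        if rec.loan_id in seen:
            skipped.append(rec)  # would violate the primary key, so bypass it
        else:
            seen.add(rec.loan_id)
            to_load.append(rec)
    return to_load, skipped


feed = [
    FeedRecord("L-001", 250_000.0),
    FeedRecord("L-002", 180_500.0),
    FeedRecord("L-001", 250_000.0),  # duplicate emitted by the provider
]
to_load, skipped = split_duplicates(feed)
print(len(to_load), len(skipped))  # 2 1
```

Keeping the first occurrence is an arbitrary choice that is only safe when the duplicates are identical, which is exactly the assumption the team verified by hand.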

Everything seemed fine, but there was an ever-present risk that bypassing a record could change a customer balance. Manual review had shown that the duplicates had no financial impact, but what about the risk of a lights-out application processing unknowns in the data? That brings us back to our ever-present question: what lies beneath? To mitigate this risk, we added a simple data quality control using our governance engine. The control asks one question: “Did the code skip any records today?” If the answer is yes, the Data Owner is notified so they can take remedial action and confirm that there is no hidden risk in the data.
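One simple way to answer that question is to reconcile the number of records received in the feed against the number actually loaded. The Python sketch below assumes both counts are available after each load; `LoadStats`, `skipped_records_control`, and the `notify_owner` callback are hypothetical stand-ins for whatever the governance engine provides, not its real API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class LoadStats:
    run_date: date
    rows_received: int  # records in the provider's feed
    rows_loaded: int    # records that landed in the warehouse table


def skipped_records_control(stats: LoadStats, notify_owner: Callable[[str], None]) -> bool:
    """Return True if the control passes (every received record was loaded).

    Any mismatch, in either direction, is reported to the Data Owner.
    """
    if stats.rows_received == stats.rows_loaded:
        return True
    notify_owner(
        f"{stats.run_date.isoformat()}: received {stats.rows_received} feed records "
        f"but loaded {stats.rows_loaded}; please confirm there is no hidden risk in the data."
    )
    return False


alerts = []  # stand-in for an email or ticketing integration
ok = skipped_records_control(LoadStats(date(2024, 5, 1), 10_000, 9_998), alerts.append)
print(ok)  # False
```

The control does not judge whether a skip is harmless; it only makes sure a person who owns the data hears about it every day it happens.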

It is common practice to code around known data problems. It is less common to use a risk management tool like our governance engine to continually monitor the state of the data and send alerts. On today’s ships, sonar looks for masses beneath the water. For this client, the control engine was looking for patterns in the data that represented potential issues, or risks, to the quality and integrity of the data. In Data Governance terms, the stakeholders here were the Data Owners, the Data Stewards, and the often-overlooked Data Consumers, both internal and external to the organization.

Data risk management is an emerging focus, even though organizations have always emphasized other types of risk. They have strict policies and procedures to mitigate operational risk, regulatory risk, credit risk, and so on, and they use complex derivatives to hedge against currency and interest rate risk. But what about the risk hidden in the data? The best analysis in the world is only as good as the underlying data. Financial disclosures are certified with fingers crossed that the data used in the reports is valid. Unknowns in the data are a significant risk, and that risk grows along with the volume of data.

My company has turned its interest and passion for data risk management into technology-enabled solutions that provide clear visibility into hidden data risks, awareness of the registered governance stakeholders, and predictable actions to take when data risks are detected. As organizations navigate toward their future, it is critical to have operational systems analogous to radar and sonar that discover hidden data risks before expensive, easily avoided mistakes are made.