There are many ways to conduct a tender process for evaluating and selecting commercial software. With many new business projects looking to buy or rent a solution rather than build one, it is worth considering:

- What makes an effective tender process?

- What artefacts are prepared to select commercial software?

- Will the process be auditable and fulfil all corporate governance rules?

This guide to better software evaluation draws on a number of successful product selections to the value of $12 million. The full process, from project initiation to selection, can take between 50 and 80 days.

Figure 1: Indicative Project Plan

The activity duration ranges outlined above allow for variations in project scope, e.g., the number of business processes involved, the number of products on the short list (typically 3-4) and the level of written response required from the vendor at the evaluation stage. The primary activities performed during the project are described in the following table.

Figure 2: Tender Process

The key benefits of this tender process relate to stakeholder ownership, insight into how the solution will improve the business process and the benefits that will be realised through those improvements.

Requirements Approach

A facilitated workshop approach is used to prepare the high-level process and data requirements. They are prepared using agile methods, which involve cross-functional participation from key business stakeholders.

Figure 3: Workshop Programme

Business models are used to brainstorm and test a common understanding of the processes conducted and the data used by the different stakeholders. Once these models stabilise, the specification is prepared to an appropriate level of detail.

The criteria for assessing the solutions are derived from the requirements by highlighting key sentences.

- Highlight Mandatory Criteria [green]

– Used sparingly (2-3 only) to identify fundamental criteria, e.g., the software must support a multi-company business model.

– All submissions must comply with the mandatory requirements or be rejected.

- Highlight Differentiating Criteria [yellow]

– Critical, important and possibly desirable requirements that will be used as evaluation hurdles.
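The mandatory hurdle behaves like a pass/fail filter applied before any scoring takes place. The Python sketch below illustrates it under stated assumptions: each vendor is assumed to declare which mandatory criteria it complies with, and the `Submission` type, the `screen` function, the criterion IDs and the second mandatory criterion are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical mandatory criteria; the guideline suggests only 2-3 of these.
MANDATORY = {
    "M1": "Ability to support a multi-company business model",
    "M2": "Ability to operate in the company's standard technology environment",  # hypothetical
}


@dataclass
class Submission:
    vendor: str
    # IDs of the mandatory criteria the vendor states it complies with.
    complies_with: set[str] = field(default_factory=set)


def screen(submissions: list[Submission], mandatory: set[str]) -> tuple[list[Submission], list[Submission]]:
    """Split submissions into (accepted, rejected); missing any mandatory criterion means rejection."""
    accepted: list[Submission] = []
    rejected: list[Submission] = []
    for sub in submissions:
        # Subset test: every mandatory criterion ID must be in the vendor's compliance set.
        if mandatory <= sub.complies_with:
            accepted.append(sub)
        else:
            rejected.append(sub)
    return accepted, rejected


submissions = [Submission("Vendor A", {"M1", "M2"}), Submission("Vendor B", {"M1"})]
accepted, rejected = screen(submissions, set(MANDATORY))
print([s.vendor for s in accepted])  # ['Vendor A']
print([s.vendor for s in rejected])  # ['Vendor B']
```

Only the accepted submissions go forward to scoring against the differentiating criteria.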

Each highlighted statement is translated into a single functional criterion by prefixing it with “ability to…”. These statements can be extended to clarify any issues that need to be resolved or the benefits expected from automating the criterion. Traceability is maintained between the assessment criteria and the business requirements specification so that the vendor receives a full contextual description of each requirement.
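One way to hold the translated criteria so that traceability is preserved is to keep the source requirement's reference on each criterion. This is a minimal sketch: the `Criterion` record, the `to_criterion` and `trace` helpers, the `BR-4.2` requirement reference and the example statements are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    criterion_id: str
    requirement_ref: str  # ID of the source statement in the requirements specification
    text: str             # single functional criterion phrased as "Ability to ..."
    kind: str             # "mandatory" or "differentiating"
    note: str = ""        # optional issue to resolve or benefit expected from automation


def to_criterion(criterion_id: str, requirement_ref: str, statement: str,
                 kind: str, note: str = "") -> Criterion:
    """Turn one highlighted requirement statement into an 'Ability to ...' criterion."""
    body = statement.strip().rstrip(".")
    body = body[0].lower() + body[1:]  # lower-case the first word so it follows "Ability to"
    return Criterion(criterion_id, requirement_ref, f"Ability to {body}", kind, note)


def trace(criteria: list[Criterion]) -> dict[str, list[str]]:
    """Map each requirement reference to the IDs of the criteria derived from it."""
    index: dict[str, list[str]] = {}
    for c in criteria:
        index.setdefault(c.requirement_ref, []).append(c.criterion_id)
    return index


c1 = to_criterion("C01", "BR-4.2", "Define product selection rules for list generation.",
                  "differentiating", note="Benefit: faster list generation")
c2 = to_criterion("C02", "BR-4.2", "Create standard templates to minimise data entry.",
                  "differentiating")
print(c1.text)          # Ability to define product selection rules for list generation
print(trace([c1, c2]))  # {'BR-4.2': ['C01', 'C02']}
```

Keeping the reference on the criterion itself means the trace can be regenerated at any time rather than maintained by hand.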

Only important requirements that will differentiate between software products are used in the evaluation scorecard, in order to minimise the number of criteria that need to be assessed. Technical and vendor criteria are based on the company’s standard technology environment and purchasing guidelines. Normally these criteria are assessed against written responses.

Ideally an evaluation panel should only have to assess 50 to 80 functional criteria in a single day’s demonstration. This is a sufficient number of criteria to assess the functionality of a single point solution, such as a Campaign Management or Content Management solution. If the number of criteria exceeds this guideline, they can be further prioritised by ranking their importance and the likelihood that each criterion will be a true product differentiator. For example, if every product is known to deliver a specific capability, it is not a suitable differentiating criterion.
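The prioritisation rule can be sketched as a filter followed by a ranking. The sketch assumes a 1-3 importance scale and a 0-1 estimate of how likely the shortlisted products are to differ on each criterion, and multiplies the two to rank candidates; this scoring formula, the `Candidate` type and the `prioritise` function are illustrative assumptions rather than part of the method described here.

```python
from __future__ import annotations

from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    importance: int          # hypothetical scale: 3 = critical, 2 = important, 1 = desirable
    differentiation: float   # estimated likelihood (0-1) that shortlisted products differ here
    universal: bool = False  # True if every shortlisted product is known to deliver it


def prioritise(candidates: list[Candidate], limit: int = 80) -> list[Candidate]:
    """Drop non-differentiating criteria, then keep the top `limit` by importance x likelihood."""
    useful = [c for c in candidates if not c.universal]
    useful.sort(key=lambda c: c.importance * c.differentiation, reverse=True)
    return useful[:limit]


candidates = [
    Candidate("Export lists to a spreadsheet", 2, 0.1, universal=True),
    Candidate("Define product selection rules", 3, 0.8),
    Candidate("Create standard templates", 2, 0.6),
    Candidate("Schedule campaign waves", 1, 0.5),
]
print([c.name for c in prioritise(candidates, limit=2)])
# ['Define product selection rules', 'Create standard templates']
```

The explicit `universal` flag removes capabilities every product is known to deliver, however important they are, which mirrors the rule stated above.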

Larger product evaluations, such as for an ERP solution, involve a series of evaluations, one for each functional area.

Artefacts and Deliverables

The primary artefacts produced during the software evaluation include the high-level business requirements for each process within the project’s scope:

- Function: Process specification, existing issues and associated requirements.

- Data: Data model and associated requirements.

- Non-functional: Features, usability, reliability, performance and standards.

- Interfaces: System integration architecture data map.

- Assessment criteria: 50 – 80 functional criteria derived from the business requirements.

- Test data: Scenario-based process test cases.

Each candidate option is compared against the requirements to identify its degree of functional fit and how well it conforms to the target architecture. The indicative costs, project timescales, pros and cons, risks and major benefits are also prepared for each option.

The final reports written to recommend a way forward include the business requirements, assessment report, architecture report, business case, solution implementation strategy and next-phase project initiation document.

Assessment Method

The software evaluation activity involves inviting a number of commercial software vendors to present their software product(s) against the requirements and assessment criteria. These demonstrations allow the business panel to assess the solutions on offer and gain confidence in the vendor’s capability to execute, and they also provide a forum for estimating an indicative project cost.

The high-level qualification criteria prepared from the business requirements are used to assess the software packages and to determine the best sourcing strategy for delivering the solution. The steps undertaken during the demonstrations include:

- Viewing the software in action during the vendor product demonstration and clarifying any concerns.

- Rating the capability demonstrated against the criteria by determining the degree of fit (0 – 3).

Figure 4: Simple Scoring

- Recording key conclusions to supplement the argument for justifying the recommendations, for example:

– Pros: A capability that the assessors felt confident about, e.g., flexible definition of product selection rules for list generation.

– Cons: Key points that may lead to a show stopper, e.g., no facility to develop standard templates to minimise data entry.

Low ratings (0 – 1) provide the basis for estimating any additional work required to resolve solution gaps.
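Identifying the gaps from the ratings is a simple threshold test on the 0-3 scale. The sketch below assumes the panel has already agreed one rating per criterion for a given vendor; the `solution_gaps` function and the criterion IDs are hypothetical.

```python
from __future__ import annotations

FIT_SCALE = range(0, 4)  # degree of fit is rated 0-3
GAP_THRESHOLD = 1        # ratings of 0 or 1 flag a solution gap


def solution_gaps(ratings: dict[str, int]) -> list[str]:
    """Return the criteria whose agreed rating is low enough to need additional work."""
    for criterion, score in ratings.items():
        if score not in FIT_SCALE:
            raise ValueError(f"{criterion}: rating {score} is outside the 0-3 scale")
    return sorted(c for c, s in ratings.items() if s <= GAP_THRESHOLD)


agreed = {"C01": 3, "C02": 1, "C03": 0, "C04": 2}
print(solution_gaps(agreed))  # ['C02', 'C03']
```

The resulting list is what the vendor would be asked to estimate enhancements for in the second stage of cost modelling.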

Figure 5: Sample Demo Agenda

The vendor demonstrations are typically scheduled over a single week. Each demonstration follows a strict agenda that allows the vendor a short time to pitch the virtues of their company but leaves most of the time for demonstrating how their solution will support the business process requirements. The vendor uses the test data provided to demonstrate automated processes, whilst elaborating on each criterion being assessed. This approach allows the evaluation panel to view the software under its expected operating conditions.

At the end of each vendor demonstration, the evaluation panel meets to finalise its scores. All individual scores are entered into the scoring spreadsheet. This quick presentation of the quantitative results allows the team to assess the functional fit and to discuss any criteria where the individual scores differ widely.
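The scorecard calculation can be sketched as follows, assuming unweighted criteria, the mean of the individual scores for each criterion, and a hypothetical 2-point spread between assessors as the trigger for discussion. The `summarise` function and assessor names are illustrative; a real scorecard may weight criteria differently.

```python
from statistics import mean

MAX_FIT = 3       # top of the 0-3 degree-of-fit scale
SPREAD_ALERT = 2  # hypothetical: a 2-point range between assessors triggers discussion


def summarise(scores):
    """scores maps criterion -> {assessor: rating 0-3} for one vendor."""
    per_criterion = {c: mean(r.values()) for c, r in scores.items()}
    # Functional fit as a percentage of the maximum possible score.
    fit_pct = 100 * sum(per_criterion.values()) / (MAX_FIT * len(per_criterion))
    # Criteria where the highest and lowest individual scores are far apart.
    discrepancies = sorted(
        c for c, r in scores.items() if max(r.values()) - min(r.values()) >= SPREAD_ALERT
    )
    return per_criterion, fit_pct, discrepancies


scores = {
    "C01": {"Assessor 1": 3, "Assessor 2": 3, "Assessor 3": 2},
    "C02": {"Assessor 1": 0, "Assessor 2": 2, "Assessor 3": 1},
    "C03": {"Assessor 1": 2, "Assessor 2": 2, "Assessor 3": 2},
}
per_criterion, fit_pct, discrepancies = summarise(scores)
print(f"Functional fit: {fit_pct:.1f}%")  # Functional fit: 63.0%
print("Discuss:", discrepancies)          # Discuss: ['C02']
```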

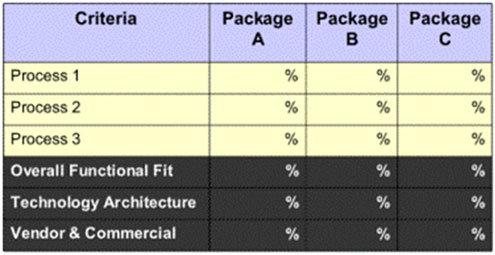

Figure 6: Scorecard Summary

Once the overall score is known and major discrepancies have been considered, the panel is asked to list the headline strengths and weaknesses they identified during the evaluation. These strengths and weaknesses are based on the pros and cons recorded against the low- and high-scoring criteria. The headline items are identified by working through the following sequence of questions.

- What are the primary features of the solution that will significantly improve the way we do business, i.e., what are the key enablers? How or why will they lead to improvement?

- What concerns did the product descriptions raise or where does the uncertainty lie?

- What was different about these applications compared to your current systems?

- What would your sourcing recommendation be regarding the package solution assessed?

- How would you rate your confidence in the vendor’s ability to deliver the business benefits sought, given the quantitative capability (evaluation score), the credibility the vendor established during the demonstration and the headline strengths and weaknesses discussed?

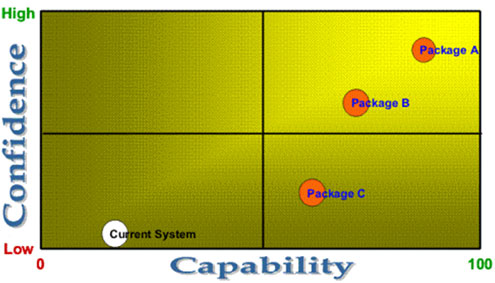

The Capability Confidence Matrix is used to estimate the team’s conviction in the vendor’s ability to execute a successful project.

Figure 7: Capability Confidence Matrix
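A vendor's position on the matrix can be sketched as a 2x2 classification. The thresholds, the 1-5 confidence vote, the use of the panel's median vote and the cell labels below are all hypothetical, since the matrix's actual axes and boundaries are only shown in Figure 7.

```python
from __future__ import annotations

from statistics import median

CAPABILITY_THRESHOLD = 70.0  # hypothetical cut-off on functional fit (%)
CONFIDENCE_THRESHOLD = 3     # hypothetical cut-off on a 1-5 confidence vote


def matrix_cell(capability_pct: float, confidence_votes: list[int]) -> str:
    """Place a vendor in a 2x2 capability/confidence grid using the panel's median vote."""
    capability = "high" if capability_pct >= CAPABILITY_THRESHOLD else "low"
    confidence = "high" if median(confidence_votes) >= CONFIDENCE_THRESHOLD else "low"
    return f"{capability} capability / {confidence} confidence"


print(matrix_cell(75.0, [4, 3, 5]))  # high capability / high confidence
print(matrix_cell(80.0, [2, 2, 3]))  # high capability / low confidence
```

The median is used here so that one unusually optimistic or pessimistic assessor does not move the vendor across a boundary on their own.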

If time permits, it is also worth evaluating the current system to provide a benchmark against which to measure the improvement expected from each of the package solutions evaluated.
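Assuming the current system is rated against the same criteria and on the same 0-3 scale, the benchmark reduces to a per-criterion difference. The `compare_to_baseline` function below is a hypothetical sketch that also lists the criteria where a package would be a step backwards from today's system.

```python
from __future__ import annotations


def compare_to_baseline(package: dict[str, int], current: dict[str, int]) -> tuple[int, list[str]]:
    """Total improvement in fit over the current system, plus criteria where the package is worse."""
    delta = {c: package[c] - current[c] for c in current}
    regressions = sorted(c for c, d in delta.items() if d < 0)
    return sum(delta.values()), regressions


current_system = {"C01": 1, "C02": 2, "C03": 0}
package_a = {"C01": 3, "C02": 1, "C03": 2}
print(compare_to_baseline(package_a, current_system))  # (3, ['C02'])
```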

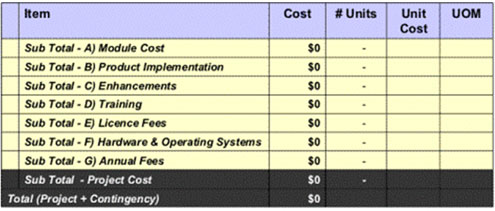

Cost Modelling Approach

The cost model is developed in two stages. In the first stage, the vendor is asked to provide a vanilla cost estimate based on the project scope statement and standard list prices. This includes:

- Expected modules, based on process scope.

- User licence fees, based on the initial system user estimate.

- Indicative implementation cost, given the vendor’s past experience on similar sized projects.

- Typical hardware platform, given indicative data volumes and user numbers.

- Standard maintenance fees, typically 15-18% of the module, licence and/or enhancement fees.
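The first-stage figures can be combined into a rough first-year range. This sketch assumes maintenance is charged annually on the module and licence fees only (there are no enhancements at this stage) and is payable in the first year; the `VanillaQuote` type and all amounts are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VanillaQuote:
    modules: int         # module fees for the expected process scope
    licences: int        # user licence fees for the initial user estimate
    implementation: int  # vendor's indicative implementation cost
    hardware: int        # typical hardware platform

    def annual_maintenance(self, pct: int) -> float:
        """Maintenance as a percentage of module + licence fees (no enhancements yet)."""
        return (self.modules + self.licences) * pct / 100

    def first_year_range(self, low_pct: int = 15, high_pct: int = 18) -> tuple[float, float]:
        """One-off costs plus one year of maintenance at the low and high rates."""
        one_off = self.modules + self.licences + self.implementation + self.hardware
        return (one_off + self.annual_maintenance(low_pct),
                one_off + self.annual_maintenance(high_pct))


quote = VanillaQuote(modules=200_000, licences=100_000, implementation=250_000, hardware=50_000)
print(quote.first_year_range())  # (645000.0, 654000.0)
```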

The second stage seeks clarification of the initial vanilla pricing. It also aims to obtain a planning estimate of any enhancements required to rectify the gaps identified from the low-scoring criteria. The revised pricing model is based on the detailed requirements provided and the shared learning gained during the product demonstrations and questioning sessions. Finally, the internal project cost estimates are added to the cost model.
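The second-stage revision can be sketched as adding three components to the vanilla total: enhancement estimates for the low-scoring criteria, maintenance on those enhancements (assumed here to be 15%, the lower end of the typical range) and the internal project costs. The `revised_estimate` function, the cost lines and the amounts are hypothetical.

```python
from __future__ import annotations


def revised_estimate(vanilla_total: int, enhancement_estimates: dict[str, int],
                     internal_costs: dict[str, int], maintenance_pct: int = 15) -> dict[str, float]:
    """Stage-two estimate: vanilla price + gap enhancements (+ maintenance on them) + internal costs."""
    enhancements = sum(enhancement_estimates.values())
    enhancement_maintenance = enhancements * maintenance_pct / 100
    internal = sum(internal_costs.values())
    return {
        "vanilla": vanilla_total,
        "enhancements": enhancements,
        "enhancement_maintenance": enhancement_maintenance,
        "internal": internal,
        "total": vanilla_total + enhancements + enhancement_maintenance + internal,
    }


estimate = revised_estimate(
    vanilla_total=645_000,
    enhancement_estimates={"C02 standard templates": 40_000, "C03 product rules": 20_000},
    internal_costs={"project manager": 90_000, "business subject-matter experts": 60_000},
)
print(estimate["total"])  # 864000.0
```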

Figure 8: Cost Model Template

This cost model is intended to provide sufficient detail to establish a project budget. The budget will be revised at the end of the vendor’s functional analysis activities (the discovery process), when more is known about the requirements, in order to obtain a firm price or potentially a fixed-price arrangement for the approved enhancements.

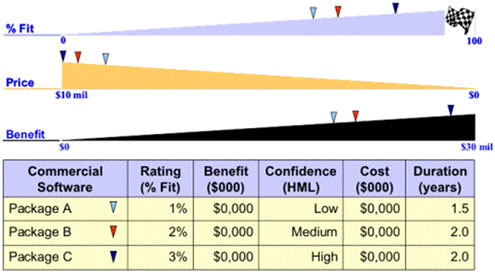

Package Evaluation Summary

The challenge for management is to decide which initiatives will take the company successfully forward into the new age and to determine which initiatives underway deserve continued support.

This software evaluation process provides management with a sound proposal to debate. The debate centres on making an informed decision on which solution provides the best functional fit, taking into account the cost of the options assessed and the potential effect of product shortfalls on realising the project benefits.

For example, the sample evaluation in Figure 9 illustrates how the most expensive package can be the best solution when it resolves a greater number of process issues that have a direct impact on the benefits model.
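The trade-off in this example can be illustrated with a simple, undiscounted comparison of the benefits from resolved process issues against cost. Everything in the sketch, including the `rank_options` function, the issue benefits, the five-year horizon and the package costs, is hypothetical; a real business case would normally also discount future benefits.

```python
from __future__ import annotations


def rank_options(options: dict[str, tuple[int, set[str]]],
                 issue_benefits: dict[str, int], years: int = 5) -> list[tuple[str, int]]:
    """Rank options by benefits from resolved process issues minus cost (undiscounted)."""
    net = {}
    for name, (cost, resolved) in options.items():
        benefit = years * sum(issue_benefits[i] for i in resolved)
        net[name] = benefit - cost
    return sorted(net.items(), key=lambda kv: kv[1], reverse=True)


issue_benefits = {"I1": 100_000, "I2": 80_000, "I3": 60_000}  # hypothetical annual benefit per issue
options = {
    "Package A": (900_000, {"I1", "I2", "I3"}),
    "Package B": (600_000, {"I1"}),
    "Package C": (700_000, {"I1", "I2"}),
}
print(rank_options(options, issue_benefits))
# [('Package A', 300000), ('Package C', 200000), ('Package B', -100000)]
```

With these figures the most expensive package ranks first because it is the only one that resolves every issue in the benefits model.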

Figure 9: Evaluation Summary

In addition to providing a decision-making framework for selecting the most appropriate solution, this collaborative software evaluation process provides many soft benefits that contribute directly to a successful implementation:

- Strong ownership and sense of accomplishment by the stakeholders that participated in the requirements analysis and vendor assessment.

- A change management team that understands the strengths and weaknesses of the solution selected, the process changes that will arise from implementing the solution and the benefits that need to be achieved to deliver a successful project.

- A strong stakeholder network that can communicate the importance of implementing a new solution and influence a larger user community that will be affected by the change.

- A good foundation for partnering by helping the vendor to gain insight into the business requirements and the project benefits expected.

Finally, the reports produced during the evaluation provide a full audit trail capable of satisfying governance review. In one recent project, the recommendation passed every programme office audit conducted, including reviews by two third-party business consultancies, the internal auditor and the legal probity auditor.
