Recently, I’ve been digging into what the future of work and the workforce might be in a post-GenAI world. Right now, organizations are experimenting with and adopting various forms of AI, GenAI, and agentic AI.

We are already seeing the impact on recruitment in areas such as customer service (where roles are being replaced by chatbots), management consulting, and software development, as company leadership chases the short-term dopamine hit of improved profits in a given quarter or fiscal year. In doing so, we may be laying the foundations for a problematic future.

Learning Ladder Collapse and AI Errors

The traditional organizational structure has been a pyramid: Lots of junior staff enter the organization, fewer people are needed at middle management, and fewer still at the most senior levels. The shifts in recruitment suggest that organizations are moving toward one of two alternatives. The first is a diamond-shaped model, with a small junior level, a larger middle management level, and a small senior leadership team. The second is an “hourglass” model, with a large number of junior staff who have more autonomy in their work, a smaller middle management layer, and a broader senior management function.

The promise is that generative AI and agentic AI will support this shift by handing the entry-level “grunt work” over to machines that can (it’s claimed) do it faster, cheaper, and more accurately. Much like North Haverbrook in the famous Simpsons “Monorail” episode, companies adopting these approaches are apparently reaping immediate benefits. But, much like North Haverbrook in the famous Simpsons “Monorail” episode, the long-term impacts might not be as promising or sustainable as the promoter suggests.

Research by Capgemini in 2024[1] surveyed 1,500 managers and 1,000 staff. The study found that most respondents across all groups felt that, within the next three years, entry-level roles will shift from creating content to reviewing output. Seventy-one percent of employees agreed with this statement, compared with an average of 63% of respondents in management and leadership roles. In effect, entry-level roles will come to resemble what we would today consider supervisory roles.

It’s important to consider the implications of this in the context of these changing organizational models. In a diamond-shaped organization, it makes sense that fewer staff will be needed when they are simply reviewing outputs. Likewise, in an hourglass model, there is a logic to staff working more autonomously with GenAI tools, requiring fewer middle managers and producing a “flatter” organization. But I’d argue that this creates a short-term challenge for organizations in recruiting and training staff, and an even longer-term challenge for promotion and succession planning. The problem is simple and comes in two parts.

Firstly, adopting generative AI tools and automating traditional entry-level cognitive and creative roles has the practical effect of cutting away the “learning ladder” that people have traditionally climbed in their careers. If we expect entry-level staff to become reviewers of outputs, capable and competent to evaluate those outputs, make decisions based on them, or take actions informed by them, organizations will need to invest adequately in appropriate training, development, and coaching for those staff. They will also need to invest in formalizing and structuring knowledge management. This is essential to replace the experiential learning that organizations often take for granted, where junior staff learn by doing, learn what “good” looks like from seeing examples, and build mental muscle by “doing the reps.”

A second, related issue concerns the future of organizations. Simply put: What is the planned pipeline for middle and senior management and leadership if we are reducing the number of entry-level and junior roles while also reducing the opportunities for experiential learning that develop the knowledge and skills needed to operate effectively at those levels? As a corollary to the learning ladder problem and the need to rethink how entry-level staff are trained and developed, organizations also need to consider how to ensure that supervisory managers and leaders have enough knowledge and experience of the process and data aspects of “doing the work” to provide meaningful coaching and knowledge transfer to entry-level and junior staff.

Beyond these two parts, a third problem arises from human factors: the increasingly well-established impact on human cognition and thought processes when people use generative AI to perform tasks involving cognition, analysis, and creative thought.[2][3][4][5] Generative AI is profoundly different from previous technologies, such as the desktop computers and spreadsheets of the dawning information age or the mechanical loom of the industrial revolution. The key difference is that GenAI represents a fundamental shift in how we cognitively engage with a task: it enables us to offload the cognitive effort of thinking about the question, the analysis, or the task at hand. We hand the thinking over to the machine, which figures out a solution, sources the relevant input data, and produces an output that we can then engage with, or which may automatically feed into the next stage of a process.

Among the issues identified in research by Microsoft (hardly a prominent critic of generative AI)[2] is that higher confidence in GenAI was associated with less critical thinking, whereas higher confidence in one’s own capabilities was associated with more critical thinking. Others raise concerns about the impact on the quality of analysis and depth of understanding. Michael Gerlich puts it as follows:

“Individuals who depend too heavily on AI to perform analytical tasks may become less proficient at engaging in deep, independent analysis. This reliance can lead to a superficial understanding of information and reduce the capacity for critical analysis.”[6]

The Pakled Problem

Taken together, these issues (How do we train entry-level staff to act as supervisors earlier? How do we ensure middle and senior managers are able to coach junior staff? How do we secure a pipeline of sufficiently skilled managers and leaders? And how do we counter the potential negative cognitive impacts of over-dependence on generative AI?) create a bundle of risks for organizations, and for society, that I have been referring to with clients and in public talks as the “Pakled Problem.”

For those unfamiliar with the reference, the Pakleds are a species in Star Trek who possess advanced technology that they don’t understand and cannot maintain or repair when it stops working, so on several occasions they must ask the crew of the Enterprise for help to make things go. The strategic risk is that the human capital development issues outlined earlier in this article could create organizations akin to the Pakleds: lacking the human capabilities to engage critically with the inputs, processes, and outputs of AI-enabled processes, and to intervene effectively to mitigate risks, remediate problems, or simply “make things go.”

For readers who prefer a different pop-culture touchstone, an equivalent metaphor would be the overweight humans on the spaceship in the movie WALL-E, who no longer understood how anything worked because the ship did everything for them and they never had to think.

Strategic Thinking

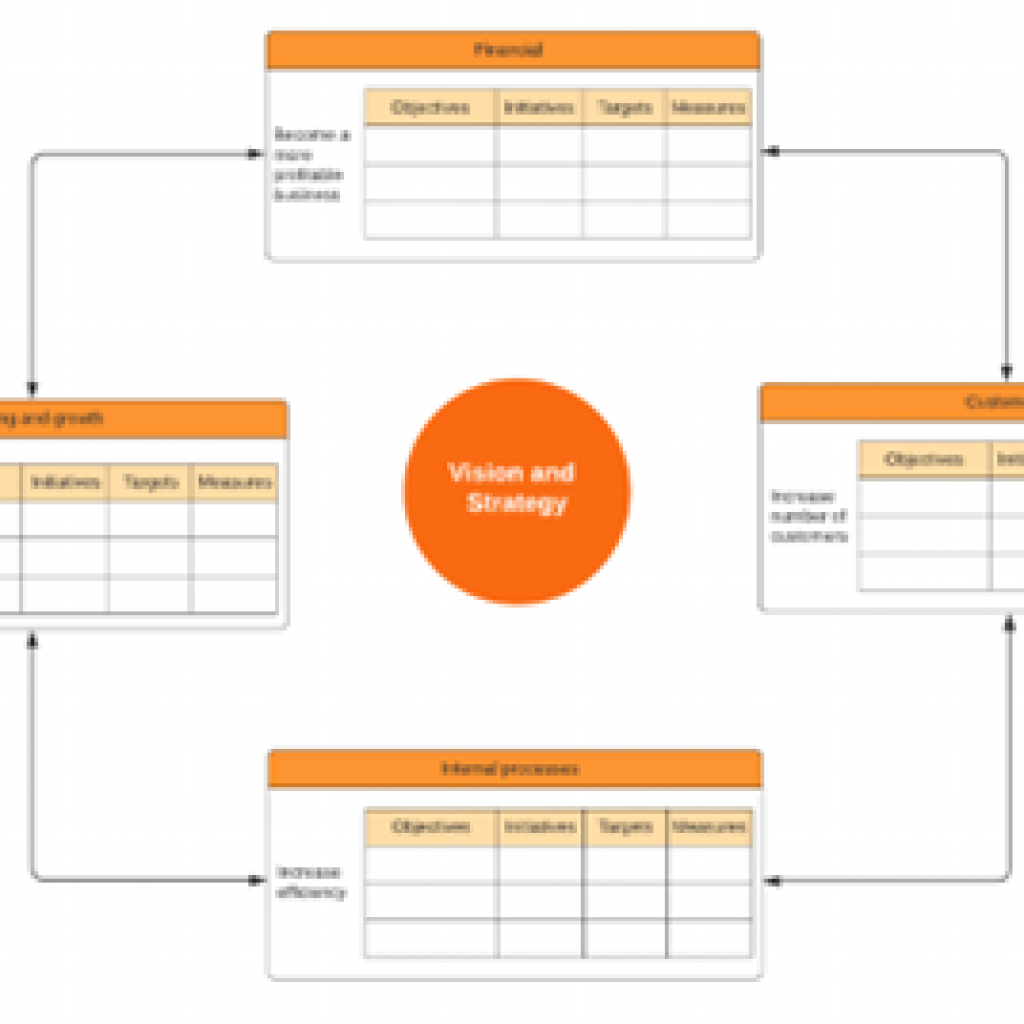

These questions matter from a data and AI strategy perspective when we view the strategy process through the lens of Kaplan and Norton’s famous “Balanced Scorecard.” The arguments in favour of adopting AI and generative AI are largely grounded in the Process and Financial dimensions of the scorecard. However, the issues discussed so far in this article fall firmly into its Learning and Growth dimension, specifically the domain of Human and Information Capital development within the strategy mapping process.

Bear in mind that applying generative AI to an existing process is not innovation; it is process improvement. When we improve a process, the “Balanced Scorecard” suggests that we also need to understand the impact on how the organization learns and develops its human capital. The Pakled Problem is the strategic challenge we need to address in that context.

The trouble is that the AI salesman hasn’t explained how North Haverbrook solved that problem.

Developing a Method to Engage the Human

As part of our work with clients and our internal R&D on how to use AI responsibly, my company is developing an approach to help promote and maintain human engagement with AI and generative AI processes. It also aims to ensure that organizations avoid tunnel vision on the technology and, when planning and deploying these tools, consider broader issues and antecedents such as data quality, metadata quality, data access and permissions, and human oversight and control. The goal is to accentuate the strengths of both the human and the tool in practice.

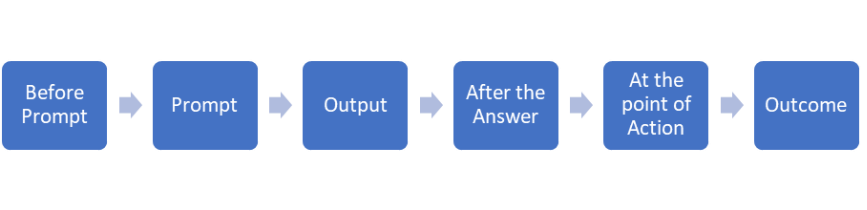

Rather than focusing on a GenAI process lifecycle defined by the prompt, its output, and the outcome, we encourage clients (and ourselves) to consider additional lifecycle stages where we may need to invest in people, process, or data capabilities to mitigate risk and improve the quality of implementations.

These additional stages, and some example considerations, are:

Before the Prompt…

- We need to consider the data and AI literacy of staff, to ensure that they understand data structures, data definitions, metadata, and how processes work.

- We need to ensure we have invested in critical evaluation and critical thinking skills for staff at all levels.

After the Answer…

- Do our teams have the appropriate skills and competencies to engage in critical assessment of outputs?

- Do they have the research skills and evaluation skills to identify errors, supplement information, and correct for error and bias in the outputs produced?

- Do our teams have the ability to apply healthy scepticism, common sense, and iterative analysis to improve the clarity and accuracy of outputs?

At Point of Action…

- How confident are individuals in their own abilities and knowledge, and are they confident enough to overrule the machine if they believe its output is wrong?

- Do we have systems and processes in place that will enable humans to apply human judgement in decision making based on the AI-generated outputs?

Tying It All Together

Organizations face a risk of AI atrophy, the erosion of human capability through over-reliance on AI, unless they take an appropriately balanced scorecard approach to investing in AI and generative AI solutions. This means making sure we invest properly in people as well as technology, and recognizing that reducing entry-level roles and opportunities for learning may have long-term consequences for the sustainability and resilience of organizations. Short-termism in strategy and business case planning for AI-enabled automation and augmentation of processes risks cutting away the rungs on the learning ladder that we have all traditionally climbed as we developed our careers.

But in practice, this simply means we need to invest more, and earlier, in developing those capabilities in a formalized and intentional way, as part of a balanced scorecard approach that recognizes the importance of the Learning and Growth dimension and of developing the Human and Information Capital of the organization. It means ensuring we develop the skills and competences needed before the prompt, after the answer, and at the point of action. We need to build a new form of learning ladder in our organizations that empowers people and retains knowledge, rather than merely mimicking intelligence.

Organizations that don’t embrace this challenge may find themselves in the future having to kidnap the chief engineer of one of their competitors to help fix their engines and make them go again.

[1] Gen AI at Work: Shaping the Future of Organisations, Capgemini, May 2024, https://www.capgemini.com/wp-content/uploads/2024/05/CRI_Gen-AI-in-Mgmt_Final_web-compressed-2.pdf

[2] Lee, H.P., Sarkar, A., Tankelevitch, L., Drosos, I., Rintel, S., Banks, R., & Wilson, N. (2025). The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers. In CHI 2025. https://www.microsoft.com/en-us/research/publication/the-impact-of-generative-ai-on-critical-thinking-self-reported-reductions-in-cognitive-effort-and-confidence-effects-from-a-survey-of-knowledge-workers/

[3] AI’s cognitive implications: the decline of our thinking skills?, IE University, https://www.ie.edu/center-for-health-and-well-being/blog/ais-cognitive-implications-the-decline-of-our-thinking-skills/

[4] Dolan, E.W., “‘Catastrophic Effects’: Can AI turn us into imbeciles? This scientist fears for the worst”, PsyPost.org, 13 Feb 2024, https://www.psypost.org/catastrophic-effects-can-ai-turn-us-into-imbeciles-this-scientists-fears-for-the-worst/

[5] Westfall, C., The Dark Side Of AI: Tracking The Decline Of Human Cognitive Skills, Forbes.com, 18 Dec 2024, https://www.forbes.com/sites/chriswestfall/2024/12/18/the-dark-side-of-ai-tracking-the-decline-of-human-cognitive-skills/

[6] Gerlich, M. (2025). AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking. Societies, 15(1), 6. https://doi.org/10.3390/soc15010006