Financial Justification

From the perspective of the business, sooner or later an investment should cause costs to go down and revenues and profits to go up. In the case of IT, it has not always been possible to draw a direct link between the investment in a project or solution and its financial return. This may be a result of the way IT costs are captured and accounted for, or of their not being captured at all. For some businesses, IT is only a cost center whose costs are allocated to business units as part of overhead. In addition, revenue impacts may be masked by other contributing business factors, including the fact that many IT organizations are not recognized as able to affect revenue.

[Publisher’s Note: This article continues a series; the first part was published separately.]

Apart from unique business needs or requirements, most business success relies on high speed, high quality, and affordable cost. A corollary set of factors applies to the data the business runs on: timeliness, integrity, and cost. Because data pervades every business process, these properties of data can and do affect cost and revenue in essentially every operation and location of the business. The corollaries can be seen from a systems perspective:

| Business | Data |
| --- | --- |
| Speed: How can the business reduce response times? | Timeliness: How can data be available when needed? |
| Quality: How can the business’ products reach a higher level of customer acceptance? | Integrity: How can errors in data be eliminated? |
| Cost: How can the cost of the business be managed? | Cost: How can the data systems be efficient and effective? |

It was these data properties that Company X failed to manage.

Data, like other business assets, has a life cycle.

Whether understood and controlled or not, data is created, serves its business function, and is ultimately retired. To avoid putting the business at risk, this life cycle must be well conceived and effectively managed. Data Life Cycle Management (DLCM) encompasses both data modeling and data management, and it is a systems approach IT managers can use to address the three factors of data: timeliness, integrity, and cost. DLCM provides an improved foundation for business data. Besides delivering the right data when and where it is needed, it provides a stable platform that supports controlled and responsive change management, and it produces data that is predictable and auditable. Each step in the life cycle contributes to these three factors and ultimately to successful financial performance.

DLCM has seven concepts or steps:

- Business planning

- Business agility

- Requirements

- Analysis

- Design

- Deployment and operations

- Governance and risk

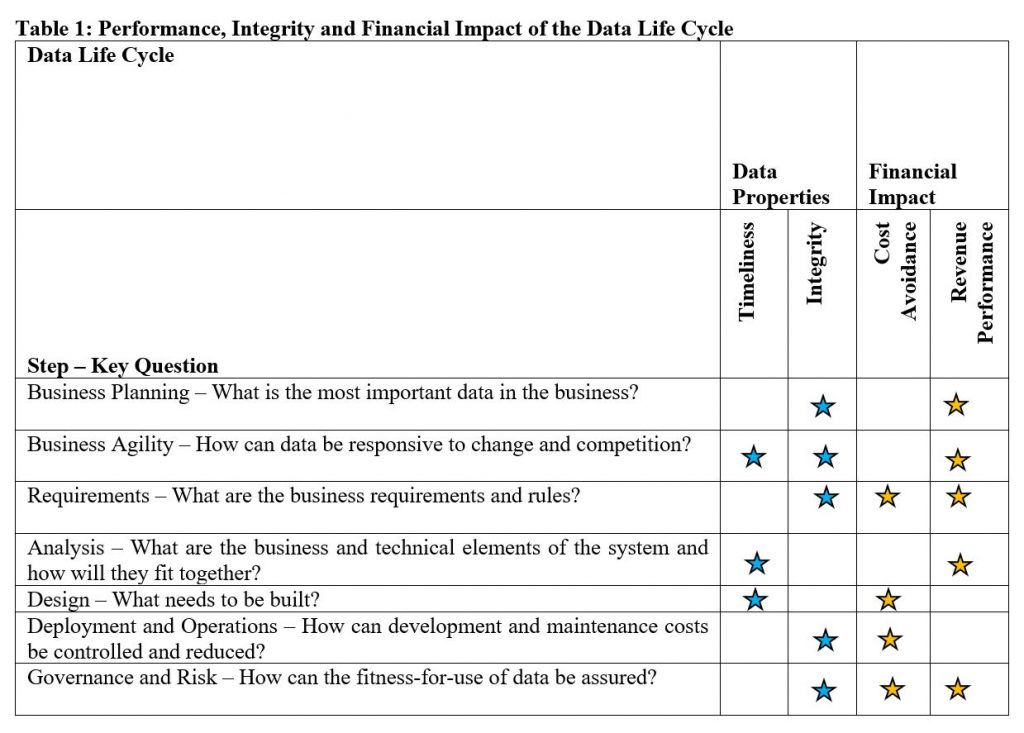

DLCM is the “floor plan” of the “software factory,” while data modeling, metadata management, and change management tools, together with modeling methods, standards, and best practices, are the factory equipment that produces the data architecture the business uses. Properly implemented, each step plays a role in achieving data timeliness, integrity, or both. Table 1, below, shows where each of the seven steps primarily affects these data properties and financial performance.

Table 1: Performance, Integrity and Financial Impact of the Data Life Cycle

Business Planning and Business Agility: The process of defining the data used in the business, i.e., data modeling, starts at the beginning of the data life cycle. These steps are the responsibility of the business. They identify what important data the business needs and how agile that data has to be, specifically where it needs to be and when it needs to be there to serve the business.

Requirements: An effective definition of detailed business requirements can directly affect both the cost of doing business and revenue and profit performance. The history of software development shows that poorly defined requirements lead to bad results. There is also the potential for lost revenue as dissatisfied customers leave. This is as true for data as for any other part of the information architecture: poor requirements definition is the leading cause of data being unfit for use. The additional costs of repairing database systems and other remediation can be quite high, especially if the source of the bad results, poor requirements definition, is not well understood. Data modeling as a discipline focuses primarily on this area, using logical modeling for requirements definition, analysis, and documentation. The corresponding physical modeling captures technical implementation requirements. When used in accordance with corporate and industry standards, modern data modeling technology provides data systems that have higher accuracy, faster response times, and lower costs of ownership.

Analysis and Design: These steps determine the technical implementation, including database management system selection and network implementation. Physical modeling of the data and associated design activities can affect system performance, specifically whether the data will arrive at the proper business destination when needed. Without adequate volumetric information and physical modeling of data structures such as data warehouses, data systems may not be sized correctly. This can lead to capacity problems, inadequate performance, or both, requiring expensive retrofits and upgrades.

Deployment and Operations: Maintaining a deployed data system can be costly when business-driven changes or undiscovered problems occur. Cost-effective change management is best supported by managing and controlling the business data requirements and data design. A modern data modeling environment can and should provide traceability of data through each step in the life cycle. Together with proper requirements definition, this is the most important step in controlling and reducing the cost of ownership of data.

Governance and Risk: A properly defined, designed, and implemented data system is essential to providing data that is fit for use. Many businesses are also required to comply with regulations and policies from external agencies. Any business in the transportation, healthcare, energy, or financial sector, for example, continues to see a growing list of oversight requirements. That oversight often reaches down to the detail level of the data: what it is, and how and where it is used. To maintain overall financial health, businesses also impose their own internal policies and standards. The costs of failing to comply with regulatory requirements can be substantial, up to and including cessation of business operations. The risk of this kind of disaster is substantially reduced by using data modeling and data management technology, standards, and methods to provide a traceable and managed data life cycle.

Other Intangible Justifications

There are also non-financial benefits to implementing a data life cycle environment. IT managers and their business partners are likely to see a behavior shift in their respective organizations. Having the proper tools and methods available to develop solutions and solve problems often increases the responsiveness and creativity of organizations. Here are some observed changes that are influenced by implementing good methodology and technology:

- Improved communications

- Improved understanding of the business and its requirements

- Higher level of alignment and coherence between and within organizational groups

- Adoption of things that support the business and rejection of things that don’t

- Increase in creativity and a proactive attitude

- Improved collaboration

Although these benefits are typically not quantifiable, organizations that exhibit these behaviors are recognized for their excellence, performance, and appeal as a workplace.

Mastering Data is Essential

Company X is just one example of what businesses in general are facing as data expands and grows in importance. On a larger scale, companies are embarking on Master Data Management Initiatives to address such problems as time to market, acquisitions and mergers, data sharing among integrated business processes, and regional and global business integration. These initiatives are driven by the business side of the business-IT relationship. Methodologies, data life cycle management, and modeling tools to aid these tasks are available. Whatever the scale of a Master Data Management Initiative, the atomic structure of data needs to be definable, understandable, usable, standardized, and manageable. Master Data managers and stewards will want to identify qualified data management tools that not only capture all relevant metadata inherent in well-engineered database systems, but also provide the ability to automatically compare, standardize, and control data across the business. Master Data Management Initiatives will not be technically or economically achievable without those capabilities.

Bottom Line

Bad data is not affordable. IT managers who use a Data Life Cycle Management approach, equipped with the proper methodologies, data modeling, and data management tool sets, have the distinct advantage of being able to support their business better by providing data that is “fit for use.” The DLCM approach also links the data in the business, how that data is constructed, and its impact on financial performance. IT managers who use DLCM will have significantly better control of the data “manufacturing” process and will be able to articulate their IT strategies more effectively and show improved returns on investment.