Algorithms guide our daily lives. Today’s seemingly simple functions, such as a GPS app’s predicted arrival time or the next music in the streaming row, can be filtered by algorithms of AI and ML.

However, their capacity to keep these promises is dependent on data annotation: the act of precisely categorizing information to educate artificial intelligence to draw conclusions.

The workhorse of our algorithm-driven world is data annotation.

What is Data Annotation?

We must tell the computers what they will interpret and give context to make decisions because they cannot process visual information the same way human brains do. Those relationships are made via data annotation. Data annotation is the human-led activity of labeling content like text, photos, and video so that machine learning models can recognize it and utilize it to generate predictions.

When we consider the current rate of data generation, data annotation is both a crucial and impressive effort. According to the Visual Capitalist, by 2026, an estimated 464 exabytes of data would be created globally on a daily basis. Moreover, according to global market Insights, the worldwide data annotation tools market is expected to increase approximately 40% annually over the next six to seven years, particularly in the automotive, retail, and healthcare industries.

Some of the great open-source tools to help you automate your labeling process include Amazon SageMaker ground truth, MathWorks ground truth labeler app, Intel’s computer vision annotation tool, Microsoft’s visual object tagging tools, and Scalable by DeepDrive, etc.

Types of Data Annotation

Each form of data has its labeling procedure, so here are a few examples of the most widespread types:

Semantic Annotation

Semantic annotation is the practice of labeling concepts such as persons, places, or firm names within a document to assist machine learning models in categorizing new concepts in future texts. It is a critical component of AI training for improving chatbots and search relevancy.

Image Annotation

This sort of annotation ensures that machines perceive an annotated area as a different item. It frequently involves the use of bounding boxes and semantic segmentation. These labeled datasets can be used in applications as diverse as self-driving cars, facial recognition models, etc.

Video Annotation

Video annotation, like picture annotation, uses techniques like bounding boxes to recognize movement on a frame-by-frame basis or via a video annotation tool. Data gleaned from video annotation is critical for computer vision models that do localization and object tracking.

Text Categorization

Text categorization is the process of assigning categories to sentences or paragraphs within a given document based on the topic.

Why Does Data Annotation Matter?

The foundation of the user experience is data. The level of familiarity you have with your clients directly impacts the quality of their experiences. As long as organizations gain more insight into their customers, artificial intelligence can assist in making the data obtained actionable. According to Gartner, 75 percent of consumer contacts will be filtered by technology, like machine learning applications, chatbots, and mobile texting by 2023.

Artificial intelligence interactions will boost text, sentiment, voice, interaction, and even traditional survey research. However, organizations must ensure high-quality datasets underlying these decisions for chatbots and virtual assistants to provide seamless consumer experiences.

According to a survey conducted by data science platform Anaconda, data scientists are now spending a large percentage of their time in data preparation. A part of that time is spent in correcting or deleting inconsistent data and ensuring measurements are exact. In addition, these tasks are critical because algorithms heavily depend on pattern recognition to make judgments, and inaccurate data can lead to biases and poor predictions by artificial intelligence. So, building SuperData, i.e., incredibly high-quality training data, will go a long way.

How Does Data Annotation Work?

We need to provide data annotations or data labels to train the machine learning model in supervised learning. Data annotation provides the label or region of interest in the particular dataset. Due to data annotations, developers get the object’s ground-truth value that can help check the accuracy of any classification, detection, and segmentation model. Several automated techniques can assist in identifying the data, as labeling it manually would be a monumental undertaking.

How Can Data Annotation Power Driverless Cars?

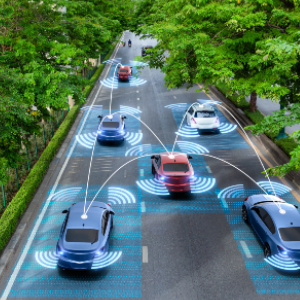

To develop the autonomous capabilities in driverless cars, algorithms that operate the driverless cars should be trained to detect, follow, and categorize objects and make decisions for path planning and safe routing (navigation). Due to the several existing sensors and cameras, advanced automobiles generate a tremendous amount of data. We cannot use these datasets effectively unless they are correctly labeled for subsequent processing. These data sets must be used as part of a testing set to develop training models for autonomous vehicles. Some of the most important aspects of data annotation and computer vision for autonomous vehicles are given here:

Pothole Detection

Road safety is one of the important areas that grab the attention of automakers. Improvements are needed to increase road safety. Potholes are mostly there on roads and need to be detected to make driverless cars more efficient. So here, semantic segmentation is needed to tackle this problem.

Voice Assistant

Automakers saw the trend of utilizing mobile devices for navigation to improve the passenger experience and began incorporating sophisticated voice-enabled assistants into their vehicles. According to Voice Bot’s data, usage of in-car voice assistants increased by 14.8% between September 2017 and January 2019. The use of voice assistants is growing rapidly, due to which the demand for high-quality training datasets keeps on increasing to ensure a smooth experience.

For example, Alexa is used to control the environmental settings of Lamborghini, which includes air conditioning, heat sensor, fan speed, temperature, seat heaters, lightening, and direction of airflow. The artificial intelligence in the voice assistant can also deduce what people mean from less clear requests, such as putting on heat or AC when the driver indicates they are too hot or cold.

Localization

Localization is considered a fundamental precondition for making effective decisions about where and how to navigate by determining a vehicle’s exact position in its environment. HD mapping compares an AV’s perceived environment with onboard sensors (including GPS) with comparable HD maps. It gives a reference point for the vehicle to determine exactly where it is and in which direction it is traveling.

For example, HD live map employs machine learning to check map data against the real world in real-time.

Key Takeaways

Artificial intelligence in self-driving cars is a never-ending sea of discoveries and constantly evolving technological twists and turns. However, autonomous driving would have been impossible without cutting-edge datasets and a robust computer vision, which equates to the requirement for a constantly rising workforce and respective difficulties for your model to excel.

The process of gathering datasets, labeling data, object detection, semantic segmentation, tracking of objects for the control system, 3-dimensional scene analysis, and depth assessment are the main challenges when training a computer vision model for autonomous cars.