My recent journey along the Nile River in Egypt sparked an unexpected professional revelation. Standing on the deck of a cruise ship, I watched as the narrow blue lifeline — representing just two to four percent of Egypt’s land — sustained an entire civilization in an otherwise harsh desert landscape. For millennia, Egyptian civilization has thrived by developing increasingly sophisticated methods to channel, store, and distribute the Nile’s waters.

Similarly, in today’s enterprise landscape, the quality of AI systems depends fundamentally on the data that flows through them. While most organizational focus remains on AI models and algorithms, it is the often underappreciated current of data flowing through these systems that determines whether an AI application becomes “good AI” or problematic technology. Just as ancient Egyptians developed specialized irrigation techniques to cultivate flourishing agriculture, modern organizations must develop specialized data practices to cultivate AI that is effective, ethical, and beneficial.

My new column, “The Good AI,” will examine how proper data practices form the foundation for responsible and high-performing AI systems. We’ll explore how organizations can channel their data resources to create AI applications that are not just powerful, but trustworthy, inclusive, and aligned with human values. The column will provide practical guidance, beginning with how to establish responsible AI governance, so that your AI initiatives deliver genuine value rather than amplifying existing problems.

Practical First Steps in Your AI Governance Journey

As organizations increasingly integrate artificial intelligence into their operations, the need for robust AI governance has never been more critical. However, establishing effective AI governance doesn’t happen in a vacuum—it must be built upon the foundation of solid data governance practices. The path to responsible AI governance varies significantly depending on your organization’s current data governance maturity level.

This article explores three practical starting points and approaches to establishing AI governance, each tailored to different organizational readiness levels. These are actionable strategies designed to get your AI governance off the ground from wherever you are today. By understanding where your organization stands, you can immediately begin implementing the most effective approach toward comprehensive AI governance.

Understanding Your Organization’s Governance Maturity

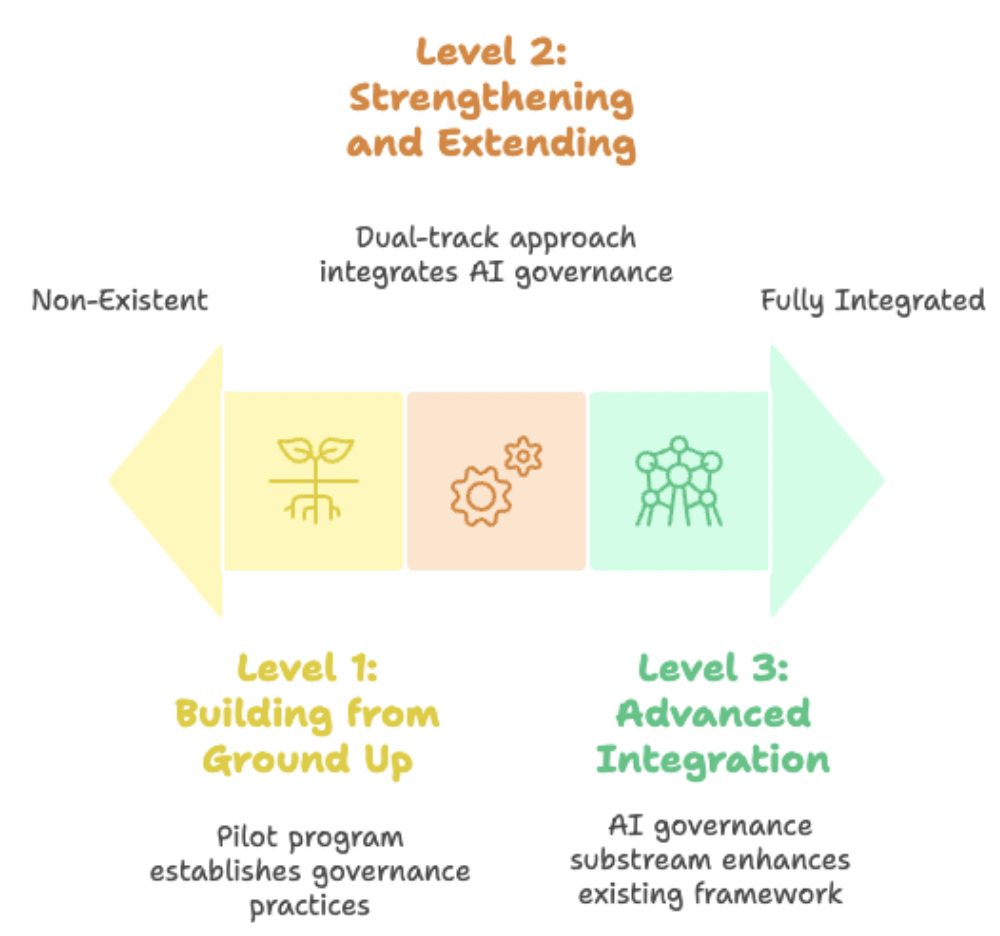

Before diving into implementation strategies, it’s essential to assess your organization’s current data governance maturity. We can broadly categorize organizations into three levels:

Level 1: Non-Existent Governance — Organizations with little to no formal data governance practices in place.

Level 2: Partially Established Governance — Organizations where certain teams have implemented some governance protocols, but practices aren’t standardized across the enterprise.

Level 3: Fully Established Governance — Organizations with comprehensive, well-established data governance frameworks already operational.

Tailored Approaches by Maturity Level

Level 1: Building from the Ground Up (Non-existent Governance)

Organizations starting from scratch face unique challenges, but also have a significant advantage: the opportunity to integrate AI governance seamlessly within their emerging data governance framework.

Getting Started: The Pilot Approach

Rather than attempting to build comprehensive governance from day one, begin with a targeted pilot program. Identify an existing AI initiative within your organization, such as a project that a team is preparing to implement and test. This becomes your proving ground for governance practices.

Establishing Your Governance Group

Form a governance working group with carefully selected stakeholders who represent critical perspectives:

- Product owners who understand business requirements

- Data producers who generate the source information

- Data, AI, and technical architects who design data systems, AI products, and integrations

- Data engineers who manage critical data pipelines

- Security professionals who ensure data protection

- Data analysts who understand data quality and usage patterns

This diverse working group ensures that governance decisions consider all aspects of the AI lifecycle, from data collection to model deployment and monitoring.

Level 2: Strengthening and Extending (Partially Established Governance)

Organizations with some existing governance practices are in a strong position to expand their capabilities to include AI-specific requirements.

Identifying AI Integration Points

Begin by conducting an inventory of AI projects that teams are currently using or planning to implement. This assessment helps you understand where the intersections between AI governance and existing data governance are most critical.

Dual-Track Approach

The most effective strategy at this maturity level involves establishing a separate AI governance process that works in conjunction with existing data governance processes. This parallel approach offers several advantages:

- Preservation of Existing Systems: Your current data governance practices remain undiluted while you strengthen and expand them.

- Focused AI Development: AI-specific practices can take shape and build momentum without being constrained by existing frameworks.

- Future Integration Potential: As both processes mature and demonstrate synergy, they can eventually be combined into a unified governance framework.

Key Personnel Identification

Focus on identifying individuals actively involved in AI model development, including AI architects, data architects, and specialized engineers. When these roles overlap with your existing data governance team members, it creates natural bridges between the two governance streams.

Level 3: Advanced Integration (Fully Established Governance)

Organizations with mature data governance frameworks can take a more sophisticated approach to AI governance integration.

AI Governance Substream Strategy

Establish a dedicated AI governance substream within your existing framework. This specialized branch should focus on:

- AI-Specific Best Practices: Develop standards tailored to machine learning workflows and model lifecycle management.

- Policy Enhancement: Upgrade existing security and data policies to address AI-specific risks and requirements.

- AI Data Cataloging: Implement specialized cataloging practices for training data, model artifacts, and AI outputs.

- Testing Methodologies: Establish comprehensive testing frameworks for AI models, including bias detection and performance validation.

- Data Use and Retention: Define specific protocols for AI training data usage, storage, and retention.

Establishing Regular Governance Cadences

Implement regular review cycles that ensure stakeholders remain engaged and informed about:

- AI use cases and applications across the organization

- Data sources and quality standards for AI models

- Model performance gaps and improvement opportunities

- Results from each iteration and any data gaps identified

- Compliance and risk assessment outcomes
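
For teams that like to keep this kind of guidance next to their tooling, the three starting points above can be summarized as a simple lookup. This is only an illustrative sketch: the names `GovernanceMaturity`, `STARTING_POINTS`, and `recommended_starting_point` are hypothetical, and the descriptions paraphrase the approaches described in this article rather than any formal standard.

```python
from enum import Enum


class GovernanceMaturity(Enum):
    """The three data governance maturity levels described in this article."""
    NON_EXISTENT = 1
    PARTIALLY_ESTABLISHED = 2
    FULLY_ESTABLISHED = 3


# Hypothetical summary table; wording paraphrases the approaches above.
STARTING_POINTS = {
    GovernanceMaturity.NON_EXISTENT: (
        "Pilot approach: pick one existing AI initiative and form a "
        "cross-functional governance working group around it."
    ),
    GovernanceMaturity.PARTIALLY_ESTABLISHED: (
        "Dual-track approach: inventory AI projects and run a separate AI "
        "governance process alongside existing data governance."
    ),
    GovernanceMaturity.FULLY_ESTABLISHED: (
        "Substream approach: add a dedicated AI governance substream to the "
        "existing framework and set a regular review cadence."
    ),
}


def recommended_starting_point(level: GovernanceMaturity) -> str:
    """Return the suggested first step for a given maturity level."""
    return STARTING_POINTS[level]


for level in GovernanceMaturity:
    print(f"Level {level.value}: {recommended_starting_point(level)}")
```

A lookup like this is deliberately coarse. Most organizations will sit between levels, so treat the result as a conversation starter rather than a verdict.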

Implementation Framework: Core Components

Regardless of your starting maturity level, certain fundamental components must be established for effective AI governance:

Data Cataloging and Dictionary Practices

Begin by establishing comprehensive cataloging practices for all data intended for AI model development. This catalog should include:

- Data Lineage: Complete traceability of data sources and transformations

- Quality Standards: Defined metrics and thresholds for data quality across all model inputs

- Master Data Definitions: Standardized definitions and classifications for all data elements used across AI models

- Common Reference Data Management: Centralized management of lookup tables, codes, and reference datasets used by multiple AI systems

- Usage Documentation: Clear specifications of how data is intended for use within models

- Derivation Tracking: Documentation of any derived datasets or feature engineering processes

- Gap Analysis: Identification of data gaps and external data requirements
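
To make the catalog items above concrete, here is a minimal sketch of what a single catalog entry might look like if it were captured as structured data. Everything in it is an assumption for illustration: the `CatalogEntry` fields, the choice of which fields count as required, and the example dataset are hypothetical, and a real data catalog tool will have its own schema.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One dataset intended for AI model development (hypothetical schema)."""
    name: str
    definition: str = ""                                   # master data definition
    lineage: list[str] = field(default_factory=list)       # sources and transformations, in order
    quality_thresholds: dict[str, float] = field(default_factory=dict)  # metric -> minimum
    reference_data: list[str] = field(default_factory=list)  # shared lookup tables and codes
    intended_use: str = ""                                 # usage documentation
    derived_from: list[str] = field(default_factory=list)  # derivation tracking
    known_gaps: list[str] = field(default_factory=list)    # gap analysis


# Assumption: these four fields must be filled in before a dataset is used for training.
REQUIRED_FIELDS = ("definition", "lineage", "quality_thresholds", "intended_use")


def missing_documentation(entry: CatalogEntry) -> list[str]:
    """List the required fields that are still empty for this entry."""
    return [name for name in REQUIRED_FIELDS if not getattr(entry, name)]


# Hypothetical example: an entry that is only partly documented.
orders = CatalogEntry(
    name="customer_orders",
    lineage=["orders_db.orders", "deduplicate", "join customer_master"],
    quality_thresholds={"completeness": 0.98},
)
print(missing_documentation(orders))
```

A check like `missing_documentation` could run in a review meeting or a pipeline gate, so that incomplete entries are visible before a dataset reaches model training.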

Model Governance Framework

Develop a comprehensive understanding of your AI models that encompasses:

- Model Capabilities: Clear documentation of what each model can and cannot do

- Expected Outcomes: Defined success metrics and performance targets

- Training and Testing Data Distribution: Analysis of the data used for model development and validation, to support bias detection at every stage

- Intended Users: Clear identification of who will use the model and how

- Usage Policies: Explicit guidelines covering appropriate and inappropriate use cases
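
The model documentation items above can also be written down in a form that software can check. The sketch below is one hypothetical way to do that, not a standard format: `ModelRecord`, `is_use_permitted`, and the example churn model are all invented for illustration, and the rule that prohibited uses always override approved ones is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Governance summary for one AI model (hypothetical schema)."""
    name: str
    capabilities: list[str]             # what the model can do
    limitations: list[str]              # what the model cannot do
    success_metrics: dict[str, float]   # metric name -> performance target
    intended_users: set[str]
    approved_uses: set[str]
    prohibited_uses: set[str] = field(default_factory=set)


def is_use_permitted(record: ModelRecord, user_group: str, use_case: str) -> bool:
    """Allow a request only for an intended user and an approved use case.

    Assumption: a use case listed as prohibited is always rejected.
    """
    if use_case in record.prohibited_uses:
        return False
    return user_group in record.intended_users and use_case in record.approved_uses


# Hypothetical example record.
churn_model = ModelRecord(
    name="churn_predictor",
    capabilities=["rank customers by likelihood of cancelling"],
    limitations=["not validated for customers with under 3 months of history"],
    success_metrics={"recall_at_top_decile": 0.60},
    intended_users={"retention_team"},
    approved_uses={"retention_outreach"},
    prohibited_uses={"credit_decisions"},
)

print(is_use_permitted(churn_model, "retention_team", "retention_outreach"))
print(is_use_permitted(churn_model, "retention_team", "credit_decisions"))
```

Keeping the policy in the same record as the capabilities and metrics means a reviewer sees what the model is for and what it must not be used for in one place.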

Monitoring and Compliance Systems

Establish robust monitoring capabilities that track:

- Usage and Output Monitoring: Integrated systems to track how models are being used in production and how model outputs are being utilized

- Input Monitoring: Mechanisms to monitor what data is being fed into models

- Performance Monitoring: Continuous assessment of model performance and drift

- Compliance Verification: Regular audits to ensure adherence to governance policies
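
As one example of what input and drift monitoring can look like in practice, the sketch below compares the mix of values a model saw during training with the mix it sees in production, using the population stability index (PSI). This is just one of several drift measures, chosen here for its simplicity. The function names and the 0.1 and 0.25 cut-offs are assumptions: those thresholds are a widely used rule of thumb, not a requirement, and should be tuned to your own risk tolerance.

```python
import math
from collections import Counter


def proportions(values, categories):
    """Share of observations falling in each category, in the given order."""
    if not values:
        raise ValueError("values must not be empty")
    counts = Counter(values)
    return [counts[c] / len(values) for c in categories]


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two lists of proportions over the same categories.

    Proportions are floored at eps so that empty categories do not
    cause a division by zero or log of zero.
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi, watch=0.1, investigate=0.25):
    """Map a PSI value to a review label (thresholds are assumptions)."""
    if psi >= investigate:
        return "investigate"
    if psi >= watch:
        return "watch"
    return "stable"


# Hypothetical example: customer segment mix at training time vs. this week.
segments = ["new", "returning", "lapsed"]
training = ["new"] * 30 + ["returning"] * 60 + ["lapsed"] * 10
this_week = ["new"] * 60 + ["returning"] * 30 + ["lapsed"] * 10

psi = population_stability_index(
    proportions(training, segments), proportions(this_week, segments)
)
print(round(psi, 3), drift_status(psi))
```

A check like this covers only one input at a time. In a governance review, the more useful output is usually the list of inputs whose status has changed since the last cycle.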

Moving Forward: Building Sustainable AI Governance

Establishing responsible AI governance is not a one-time project but an ongoing organizational capability. Success requires commitment to continuous improvement, regular assessment of governance effectiveness, and adaptation to evolving AI technologies and regulatory requirements.

By aligning your AI governance approach with your organization’s current data governance maturity, you can build a sustainable framework that grows with your AI capabilities while maintaining the trust and transparency that responsible AI demands.

The key is to start where you are, use what you have, and build systematically toward comprehensive AI governance that serves both your business objectives and your ethical obligations to stakeholders and society.