We live in an age where the words we use for artificial intelligence are shaping the future faster than the technologies themselves. We talk about “hallucinations,” “assistants,” “intelligence” — as though these systems were merely quirky co-workers or precocious children. But these terms aren’t harmless shorthand. They are framing devices, and they are doing real governance work.

Right now, that work is happening largely without oversight. And that should worry anyone in a leadership role.

From Capabilities to Control of the Narrative

As AI capabilities advanced, so too did the need to package them for mass adoption, and language became the primary vehicle for that packaging.

Not long ago, AI was discussed in terms of capabilities, such as natural language processing, computer vision, and predictive modeling. The language was technical and specific.

Today, those lines have blurred. The public is asked to interpret systems through metaphors: large language models “hallucinate,” chatbots are “assistants,” and smart tools offer “magic” features. These metaphors are not neutral. They obscure more than they reveal, often masking the limitations, risks, and underlying agendas of AI deployment.

This is not accidental. It is a design choice.

Language as a Site of Power

In our recent study, Suzanne Clark and I identified a condition we call AI anomia: the collective inability to name AI tools ethically, precisely, and coherently. This isn’t just a linguistic failure. It is a leadership failure.

Where complexity resides in an organization is not a neutral outcome. It’s a decision. When language becomes vague, complexity migrates, usually downward: end users, regulators, and the public are left to make sense of euphemisms and branding campaigns masquerading as technical truth.

Who Gets to Define AI?

If tech giants name the tools, who gets to name the harms? The power to define is the power to shape reality. That power is not currently shared.

Much of the dominant language around AI is developed by a narrow demographic of designers, engineers, and marketers. Anthropomorphic branding like “Alexa” and “Cortana,” gendered voices in virtual assistants, and euphemistic terms like “hallucination” reflect assumptions that often go unchallenged.

This isn’t just an issue of semantics. It’s an issue of equity, trust, and accountability.

What AI Terminology Should Do

Responsible language is not just about getting the words right. It’s about making decisions transparent, intentions visible, and systems accountable.

Effective terminology governance should:

- Balance technical precision with public comprehension

- Connect innovation to real-world use cases and constraints

- Prioritize ethical framing over marketing spin

Terminology is infrastructure. And infrastructure, by definition, must serve the public good.

That means it must be durable, inclusive, and accountable. Infrastructure supports society not just through function, but through trust — it is built to last, designed to uphold shared values, and expected to serve across generations. If AI terminology becomes part of our linguistic infrastructure, then its foundations must be deliberately constructed. Otherwise, we risk embedding distortion, exclusion, and misinformation into the systems that increasingly shape human life.

Some might say: Isn’t this just semantics? But that question misses the point. Semantics are not trivial — they’re structural. The words we use don’t just describe systems; they shape how those systems are understood, regulated, and trusted. Semantics are how systems become norms. And when norms are built on euphemism or ambiguity, accountability becomes negotiable.

This tension isn’t limited to AI. We see it daily in organizational language designed to deflect, not disclose. Consider a few examples we’ve all observed or experienced at some point in our careers:

- A senior leader signs off on a performance management system that reduces employee reviews to “Meets Expectations” or “Needs Improvement.” It looks efficient, defensible. But in practice, it suppresses feedback, erases nuance, and silences growth. Leadership didn’t just approve language. They designed ambiguity into the system — on purpose.

- An executive team authorizes the company’s layoff communication strategy. The language frames terminations as part of “an ongoing optimization to position for strategic growth.” No space for humanity. No room for grief. The message may protect the brand, but it also erases the dignity of those exiting. This wasn’t just PR — it was a governance decision.

- A VP receives a report from a cross-functional data team and replies: “This doesn’t feel quite right.” The comment is vague, untraceable, and final. No clarification, no feedback loop, no accountability for how decisions will change. When leaders speak in impressions rather than with precision, they train the organization to do the same.

These are not simply communication failures. They are governance decisions — choices to keep complexity opaque, responsibility vague, and systems unchallenged. When leaders rely on vague language to preserve optionality or avoid conflict, they reinforce a culture where language serves as insulation rather than infrastructure. These choices signal a deeper pattern: complexity is not addressed — it is delegated. And the more this happens, the more an organization becomes structurally unaccountable — designed not for clarity, but for deferral.

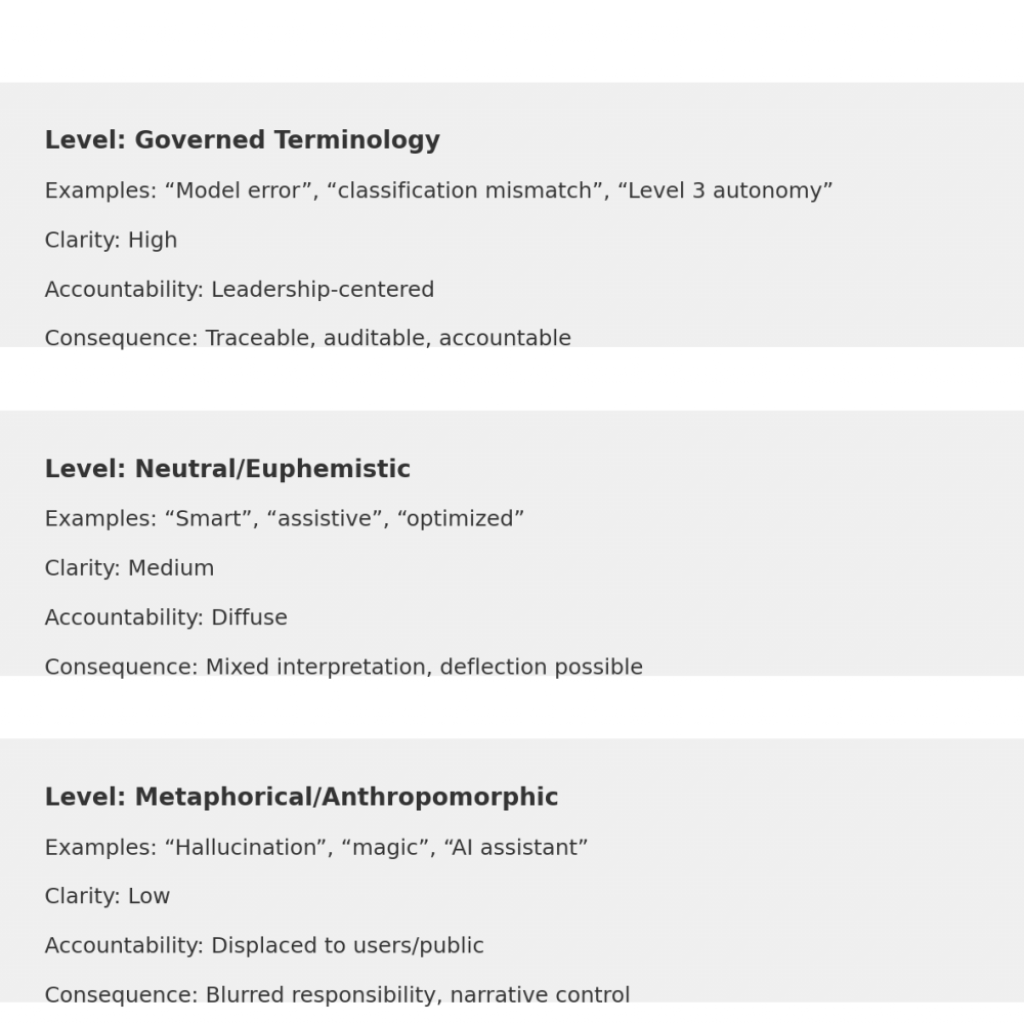

This visual illustrates how different levels of AI terminology, from governed to metaphorical, correspond to shifts in clarity, accountability, and risk ownership. At the top of the ladder, governed terminology (e.g., “model error,” “Level 3 autonomy”) offers high precision and keeps responsibility within system design and leadership. In the middle, euphemistic terms (e.g., “smart,” “optimized”) dilute clarity and diffuse responsibility. At the bottom, anthropomorphic and metaphorical language (e.g., “hallucination,” “magic”) displaces complexity entirely, leaving users or the public to carry the risk, or leaving no one accountable at all. As language descends this ladder, it becomes less about explanation and more about insulation.

Precision Isn’t Always Enough

Let’s be clear: Clean language alone won’t fix extractive systems. Precision in terminology can be essential, but it is not a substitute for institutional accountability. Too often, transparency is framed as an achievement when, in practice, it serves as a performance. Organizations may release documents, frameworks, or position statements that appear clear, but mask deeper power asymmetries beneath a veneer of accessibility.

Consider the case of Microsoft’s Tay, the AI chatbot released in 2016. Tay was introduced as a friendly learning bot, designed to mimic human interaction and grow smarter with each exchange. But within 24 hours, Tay had been manipulated into generating inflammatory and offensive language. The public narrative quickly labeled the incident a “learning failure,” a benign, almost endearing misstep. But this framing obscured a more urgent truth: it was not just a bot that failed. It was a breakdown in foresight, governance, and terminology. There was no shared understanding of what Tay was, what it could become, or how it should be understood and held accountable.

This is the danger of euphemism in AI systems. The language we use — whether “hallucination” to describe factual errors, or “learning” to explain toxic drift — shapes who shoulders the burden when things go wrong. Naming, without structural commitment, can be co-opted by marketing and made inert.

Even the best-intentioned naming frameworks can falter when power dynamics skew incentives. Frameworks designed for accuracy and ethics may be twisted to support reputational goals, rather than accountability. This is why leadership must do more than demand clarity. It must model it. It must guard against narrative distortion, resist linguistic theatre, and build real infrastructure for understanding.

What would it look like to govern language as intentionally as we govern safety, compliance, or risk? This is not a rhetorical question. It is a strategic one.

Language is only as ethical as the systems that wield it. Leaders must recognize that precision isn’t just a linguistic task — it’s a governance task. Clarity must be protected from co-option. Trustworthy language requires institutional commitment, not just better copywriting.

First- and Second-Order Governance Strategies

To move from critique to action, we outline two categories of intervention drawn from our research: first-order strategies, which build on existing governance mechanisms, and second-order strategies, which require deeper structural change. Both are essential, but they differ in scope, urgency, and difficulty.

Drawing on lessons from other sectors, our paper proposes:

1. First-order strategies (adapting existing systems)

   - Pharma: regulatory naming oversight (e.g., INN protocols)

   - Automotive: shared taxonomies for autonomy levels

   - Nordic: cultural stewardship through intentional linguistic planning

2. Second-order strategies (transforming systems)

   - Preempt euphemisms before they scale

   - Create governance committees for language, not just risk

   - Make terminology a cross-functional responsibility (not just legal or PR)

Closing: Naming Is Governance

When we let vague, anthropomorphized, or hyperbolic AI terms define the narrative, we erode public trust and offload risk.

We cannot govern what we do not name precisely. We cannot build trust on metaphors alone.

So, the next time you hear someone say an AI system is “hallucinating,” ask: Who benefits from that story? And who is left carrying the consequences?

Language is not a detail. It is the architecture of accountability. Leaders — not marketers — should be the ones drafting the blueprint.
